The Evolution of Openness: A Deep Dive into Meta’s Llama LLM Series

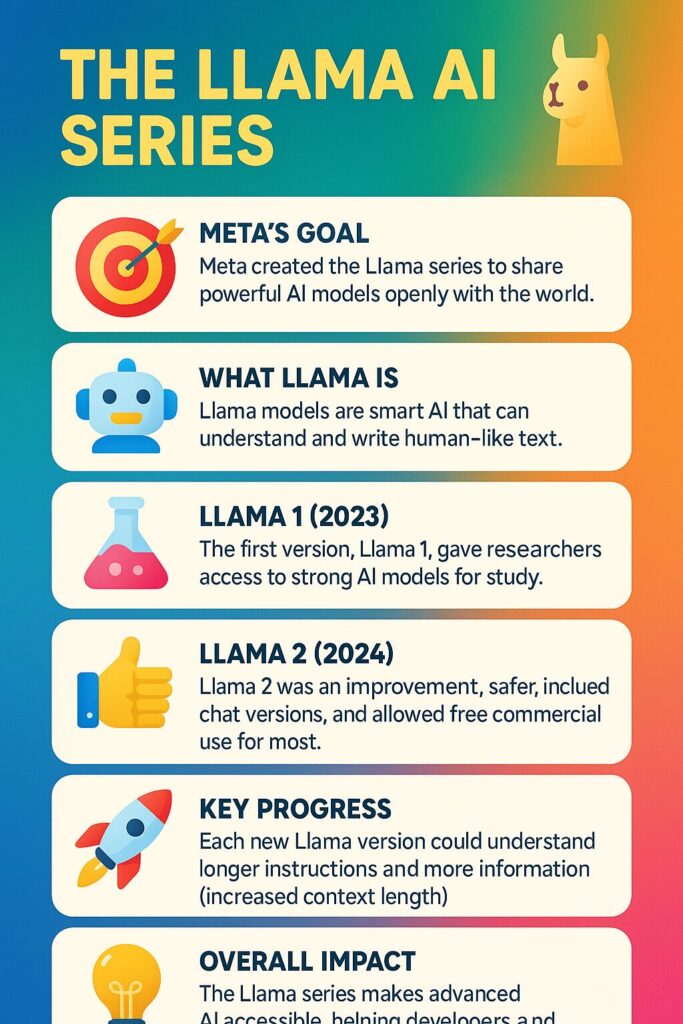

The landscape of artificial intelligence, particularly in the realm of large language models (LLMs), has been marked by rapid innovation and a persistent tension between closed, proprietary systems and more open approaches. In this dynamic environment, Meta AI has emerged as a significant proponent of open science, primarily through its Llama series of LLMs. Standing for Large Language Model Meta AI, Llama represents a concerted effort to democratize access to powerful generative AI tools, fostering research, development, and innovation across the global community.

From its initial release aimed at researchers to its latest iteration challenging the state-of-the-art across various benchmarks, the Llama family has undergone a remarkable evolution. This article delves into the journey of Llama, exploring the key characteristics, advancements, and impact of each major version – Llama 1, Llama 2, and the current flagship, Llama 3 – concluding with a comparative analysis of their features.

Chapter 1: The Genesis – Llama 1 (The Foundation for Open Research)

Release Date: February 2023

Context: In early 2023, the LLM field was largely dominated by massive, closed models like OpenAI’s GPT-3 and Google’s PaLM. Access to these models was often restricted or available only through an API, and the underlying architecture and training data were typically opaque. This created significant barriers for academic researchers and smaller organizations wanting to study, understand, and build upon these powerful technologies.

Meta’s Objective: Meta AI sought to address this gap. Their stated goal with Llama 1 was not necessarily to create the single largest or most performant model, but to provide the research community with a set of capable, reasonably sized foundation models. The idea was that smaller, well-trained models could be studied, fine-tuned, and deployed more readily without requiring the colossal computational resources commanded by tech giants.

Key Features and Technical Details:

- Model Sizes: Llama 1 was released in four sizes: 7 billion, 13 billion, 33 billion, and 65 billion parameters. This range offered flexibility, allowing researchers to choose a model that fit their computational budget and specific task requirements. The 7B model, in particular, was small enough to run on a single GPU, showing that impressive performance did not require a large cluster at inference time.

- Architecture: Llama 1 employed a standard Transformer architecture, similar to other contemporary LLMs, but incorporated several key improvements that had become popular in the field:

- Pre-normalization (RMSNorm): Instead of normalizing the output of each Transformer sub-layer, Llama normalizes the input to each sub-layer, using Root Mean Square Layer Normalization (RMSNorm) to improve training stability.

- SwiGLU Activation Function: Replacing the commonly used ReLU activation function with SwiGLU (a variant of Gated Linear Units) was found to enhance performance.

- Rotary Positional Embeddings (RoPE): Instead of using absolute positional embeddings, Llama incorporated RoPE to inject relative positional information at each layer, improving generalization on longer sequences.

- Training Data: Llama 1 was trained on a massive dataset sourced exclusively from publicly available data: roughly 1.4 trillion tokens for the 33B and 65B models and about 1 trillion tokens for the 7B and 13B models. Sources included Common Crawl, C4, GitHub, Wikipedia, ArXiv, and Stack Exchange. Crucially, Meta emphasized that no proprietary Meta user data was used. The focus was on text, primarily English, with some representation from other languages with Latin or Cyrillic alphabets.

- Performance: Despite its relatively small parameter counts compared to giants like GPT-3 (175B) or PaLM (540B), Llama 1 demonstrated remarkable performance. The Llama-65B model was competitive with models like DeepMind’s Chinchilla (70B) and Google’s PaLM (540B) on many benchmarks, while even the Llama-13B model outperformed GPT-3 (175B) on several tasks. This highlighted the efficiency of its training and architecture.

- Licensing and Release: Llama 1 was initially released under a non-commercial license, specifically targeted at researchers, academic institutions, government entities, and civil society organizations. Access to the weights was granted on a case-by-case basis upon application. However, shortly after its release, the model weights were leaked online and became widely accessible, inadvertently accelerating its adoption and experimentation within the broader developer community, albeit outside the intended licensing framework.
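To make the three architecture changes listed above (RMSNorm, SwiGLU, RoPE) more concrete, here is a minimal pure-Python sketch of each one operating on plain lists. It is an illustration under stated assumptions, not Meta’s implementation: the function names, the `eps` and `base` defaults, and the choice to rotate consecutive pairs of values in `rope` are all assumptions made for this example.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Divide x by its root mean square, then apply a per-element gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def silu(v):
    """SiLU (Swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def matvec(matrix, x):
    """Multiply a row-major matrix (a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """Gated feed-forward block: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)


def rope(x, position, base=10000.0):
    """Rotate each pair (x[i], x[i+1]) by the angle position * base**(-i/d).

    Assumes len(x) is even. Pairing consecutive elements is one common
    convention; other implementations pair the two halves of the vector.
    """
    d = len(x)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c])
    return out


if __name__ == "__main__":
    # Illustrative values only.
    print(rms_norm([3.0, 4.0, 0.0, 0.0], [1.0] * 4))
    print(rope([1.0, 0.0, 1.0, 0.0], position=2))
```

One property worth checking by hand: the dot product of two vectors rotated by `rope` depends only on the difference between their positions, which is what "relative positional information" means in practice.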

Impact: Llama 1 was a landmark release. It proved that highly capable LLMs could be trained efficiently and made accessible (even if partly unintentionally) to a wider audience. It catalyzed a surge in open-source LLM research and development, inspiring numerous fine-tuning efforts and derivative models (like Alpaca, Vicuna) that explored instruction following and conversational abilities.

Chapter 2: Scaling Up and Opening Doors – Llama 2

Release Date: July 2023

Context: Building on the success and learnings from Llama 1, and acknowledging the community’s hunger for more openly accessible models, Meta prepared its next major iteration. The landscape had continued to evolve, with increased focus on model safety, alignment, conversational abilities, and the potential for commercial applications of open models.

Meta’s Objective: With Llama 2, Meta aimed to push the boundaries further in terms of performance, safety, and accessibility. A key strategic move was partnering with Microsoft, making Llama 2 available on Azure and Windows, and adopting a much more permissive licensing model suitable for commercial use.

Key Features and Technical Details:

- Model Sizes: Llama 2 was released in 7B, 13B, and 70B parameter sizes. Meta also trained a 34B model and reported results for it in the Llama 2 paper, but it was not part of the initial public release. Alongside the base pre-trained models, Meta also released fine-tuned “Llama 2-Chat” variants optimized for dialogue use cases.

- Architecture: Llama 2 retained the core architectural improvements of Llama 1 (RMSNorm, SwiGLU, RoPE). A significant enhancement, particularly for the larger 70B model, was the introduction of Grouped-Query Attention (GQA). GQA speeds up inference time and reduces the memory bandwidth requirements for attention computations by sharing key and value projections across multiple query heads, without significantly impacting quality.

- Training Data: The training corpus was expanded and refined. Llama 2 was trained on 2 trillion tokens – a 40% increase over Llama 1 – using a new mix of publicly available online data. Meta stated efforts were made to remove data from sites known to contain high volumes of personal private information and to up-sample factual sources to increase knowledge and reduce hallucinations.

- Context Length: A crucial improvement was doubling the context length from Llama 1’s 2048 tokens to 4096 tokens. This allows the model to process and maintain information over longer prompts and conversations, enhancing its utility for tasks like document summarization, lengthy Q&A, and extended dialogue.

- Fine-tuning and Safety: Significant emphasis was placed on safety and helpfulness, especially for the Llama 2-Chat models. These models underwent extensive supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). Meta detailed its use of techniques like safety-specific data annotation, safety reward models, and context distillation. They also introduced Ghost Attention (GAtt), a method to help the model maintain focus on initial instructions throughout a multi-turn conversation.

- Performance: Llama 2 demonstrated substantial improvements over Llama 1 across a wide range of external benchmarks, including reasoning, coding, proficiency, and knowledge tests. The Llama 2 70B model showed performance comparable to closed-source models like GPT-3.5 on several metrics, and the Llama 2-Chat variants often outperformed open-source chat models available at the time.

- Licensing and Release: This was perhaps the most significant shift. Llama 2 was released under a custom, permissive license that allowed for both research and commercial use, free of charge. The only major restriction was for companies with over 700 million monthly active users, who would need to seek a specific license from Meta. This move dramatically opened up the potential for businesses and startups to build applications on top of a powerful, open foundation model.
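The memory saving from Grouped-Query Attention, described in the architecture bullet above, can be estimated with simple arithmetic. The sketch below maps query heads to shared key/value heads and compares key/value cache sizes at the 4096-token context length. The shape used (80 layers, 64 query heads, 8 key/value heads, head dimension 128, 16-bit values) is an assumption chosen to resemble a 70B-class model; it is not taken from this article, and the helper names are hypothetical.

```python
def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Return the index of the shared key/value head a query head reads from."""
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # The leading 2 accounts for storing both keys and values.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed model shape (not from the article): 80 layers, head_dim 128.
N_LAYERS, HEAD_DIM, SEQ_LEN = 80, 128, 4096

# Standard multi-head attention: one key/value head per query head.
mha_bytes = kv_cache_bytes(N_LAYERS, n_kv_heads=64, head_dim=HEAD_DIM, seq_len=SEQ_LEN)
# Grouped-query attention: 64 query heads share 8 key/value heads.
gqa_bytes = kv_cache_bytes(N_LAYERS, n_kv_heads=8, head_dim=HEAD_DIM, seq_len=SEQ_LEN)

if __name__ == "__main__":
    print(f"MHA cache: {mha_bytes / 2**30:.2f} GiB")
    print(f"GQA cache: {gqa_bytes / 2**30:.2f} GiB")
    print(f"Reduction: {mha_bytes // gqa_bytes}x")
```

Under these assumptions the cache shrinks in direct proportion to the number of key/value heads, which is where the reduced memory bandwidth at inference time comes from.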

Impact: Llama 2 cemented Meta’s position as a leader in the open-source AI movement. Its permissive license, combined with strong performance and dedicated chat variants, led to widespread adoption in both academia and industry. It became a foundational model for countless fine-tuning projects, specialized applications, and commercial services, significantly lowering the barrier to entry for building with sophisticated AI.

Chapter 3: Reaching New Heights – Llama 3 (The Current State-of-the-Art)

Release Date: April 2024

Context: By early 2024, the pace of LLM development had not slowed. Models were becoming increasingly capable, particularly in complex reasoning, coding, and nuanced instruction following. The demand for even more powerful, efficient, and safe open models continued to grow, alongside a desire for better multilingual capabilities and larger context windows.

Meta’s Objective: With Llama 3, Meta aimed to deliver best-in-class performance for open-source models at their respective scales. The goal was to create models that were not just competitive, but state-of-the-art on industry benchmarks, significantly improving helpfulness, reasoning abilities, code generation, and overall instruction following, while continuing Meta’s commitment to responsible open release.

Key Features and Technical Details:

- Model Sizes (Initial Release): The initial release of Llama 3 included pre-trained and instruct-tuned models in two sizes: 8 billion and 70 billion parameters. Meta explicitly stated that much larger models, including one exceeding 400 billion parameters, were still in training at the time and slated for future release. These larger models were expected to introduce new capabilities, potentially including multimodality.

- Architecture: Llama 3 builds upon the Llama 2 architecture with further refinements. GQA is now used in both the 8B and 70B models, and a key visible change is a new tokenizer with a significantly larger vocabulary of 128,000 tokens (compared to Llama 2’s 32,000). The larger vocabulary encodes text more efficiently, so the same input takes fewer tokens, which can improve performance, especially on multilingual tasks and code.

- Training Data: This is perhaps the most dramatic upgrade. Llama 3 was trained on an enormous, newly curated dataset comprising over 15 trillion tokens, more than 7 times the data used for Llama 2 and reportedly one of the largest datasets ever assembled for LLM training. This data was sourced from publicly available online sources and includes a significantly larger proportion of non-English data (over 5% high-quality non-English data covering 30+ languages) and four times more code than Llama 2. Meta emphasized extensive data filtering pipelines (heuristic filters, NSFW filters, semantic deduplication, and text-quality classifiers whose training data was generated with Llama 2) to ensure high quality and diversity.

- Context Length: Llama 3 models were trained with an 8192-token context length by default, double that of Llama 2. Furthermore, techniques such as RoPE scaling may allow the context window to be extended beyond this length at inference time, though quality at longer lengths is not guaranteed.

- Fine-tuning and Safety: Meta refined its post-training procedures for Llama 3 instruct models, combining SFT, rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO). This combination aimed to enhance reasoning, coding abilities, and overall response quality while improving safety and reducing refusal rates for safe prompts. Meta also introduced updated safety tools like Llama Guard 2, Code Shield, and CyberSec Eval 2 alongside the model release.

- Performance: Llama 3 set a new standard for open-source models at the 8B and 70B scales upon its release. Meta reported state-of-the-art performance on numerous standard benchmarks (like MMLU, GPQA, HumanEval, GSM-8K), outperforming comparable open models and even competing strongly with leading proprietary models in certain areas. Users and independent evaluations generally confirmed significant improvements in reasoning, code generation, following complex instructions, and generating more diverse and higher-quality text compared to Llama 2.

- Licensing and Release: Llama 3 continues Meta’s open approach. It was released under the Meta Llama 3 Community License, which largely mirrors the permissive structure of the Llama 2 license: free use for research and commercial purposes, with the same requirement that companies above 700 million monthly active users obtain a separate license from Meta. The models were made broadly available through platforms like Hugging Face, cloud providers (AWS, Google Cloud, Azure), and hardware platforms (NVIDIA, Intel, AMD). Llama 3 also powers the latest generation of the Meta AI assistant integrated into Facebook, Instagram, WhatsApp, and Messenger.
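For readers unfamiliar with DPO, one of the post-training methods named above, the objective from the original DPO paper is compact enough to sketch directly. The snippet below is a minimal illustration for a single preference pair, not Meta’s training code; the function names, the `beta` default, and the example log-probabilities are assumptions made for this example.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model.
    """
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)) equals softplus(-margin).
    return softplus(-margin)


if __name__ == "__main__":
    # Hypothetical log-probabilities, for illustration only.
    print(dpo_loss(-4.0, -9.0, -5.0, -8.0))
```

The loss falls as the policy raises the likelihood of the preferred response relative to the reference model and lowers that of the rejected one. No separate reward model is needed, which is the main practical difference from PPO-based RLHF.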

Impact and Future: Llama 3 represents a major leap forward, solidifying the role of open-source models as powerful alternatives to proprietary systems. Its enhanced capabilities, particularly in reasoning and coding, combined with its permissive license, make it an incredibly attractive foundation for developers and researchers. The promise of even larger, potentially multimodal Llama 3 models in the future suggests that Meta intends to keep pushing the boundaries of open AI development.

Chapter 4: Comparative Analysis – Llama Versions Side-by-Side

The evolution from Llama 1 to Llama 3 showcases a clear trajectory of increasing scale, capability, and openness. Key trends include:

- Exponential Data Growth: The training dataset size exploded from 1.4T tokens (Llama 1) to 2T (Llama 2) and then dramatically to over 15T (Llama 3), reflecting the understanding that high-quality, diverse data at massive scale is crucial for performance.

- Expanding Context Windows: The ability to handle longer inputs doubled with each generation, from 2048 (Llama 1) to 4096 (Llama 2) to 8192 (Llama 3), enhancing usability for complex tasks.

- Architectural Refinements: While maintaining a core Transformer base, each version incorporated optimizations like GQA and improved tokenizers to enhance efficiency and performance.

- Increased Focus on Safety and Alignment: Starting significantly with Llama 2 and further refined in Llama 3, dedicated efforts in fine-tuning (SFT, RLHF variations) and safety protocols became integral to the releases.

- Shift Towards Permissive Licensing: The move from Llama 1’s research-only license to Llama 2 and 3’s broad commercial licenses was pivotal in driving adoption and real-world application.

- State-of-the-Art Ambitions: While Llama 1 aimed for research accessibility, Llama 2 and especially Llama 3 explicitly target state-of-the-art performance within the open-source domain, directly competing with leading models.
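The growth figures in the list above are easy to verify from the numbers quoted in this article. The short sketch below recomputes them. The token counts are the rounded figures used here (and "over 15T" is treated as exactly 15T, so the Llama 3 ratios are lower bounds); the dictionary and function names are hypothetical.

```python
# Rounded figures as quoted in this article.
TOKENS = {"Llama 1": 1.4e12, "Llama 2": 2.0e12, "Llama 3": 15e12}
CONTEXT = {"Llama 1": 2048, "Llama 2": 4096, "Llama 3": 8192}


def ratio(table, old, new):
    """How many times larger the newer value is than the older one."""
    return table[new] / table[old]


if __name__ == "__main__":
    for old, new in [("Llama 1", "Llama 2"), ("Llama 2", "Llama 3")]:
        data = ratio(TOKENS, old, new)
        ctx = ratio(CONTEXT, old, new)
        print(f"{old} -> {new}: {data:.2f}x training tokens "
              f"({(data - 1) * 100:.0f}% more), {ctx:.0f}x context length")
```

With these rounded inputs, the Llama 1 to Llama 2 increase works out to roughly 43%, consistent with the "40% increase" Meta quoted, and the Llama 2 to Llama 3 increase to at least 7.5 times.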

Here is a table summarizing the key features across the major Llama versions:

| Feature | Llama 1 | Llama 2 | Llama 3 (Initial Release) |
|---|---|---|---|
| Release Date | February 2023 | July 2023 | April 2024 |
| Key Model Sizes | 7B, 13B, 33B, 65B params | 7B, 13B, 70B params (+ Chat variants) | 8B, 70B params (pre-trained + Instruct variants) |
| Max Context Length | 2048 tokens | 4096 tokens | 8192 tokens |
| Training Data Size | ~1.4 trillion tokens | ~2.0 trillion tokens | >15 trillion tokens |
| Training Data Focus | Publicly available, mostly English | Publicly available, improved curation, more factual sources | Massive scale, public, enhanced filtering, more code & non-English data (>5%) |
| Tokenizer Vocab Size | ~32,000 | ~32,000 | ~128,000 |
| Key Arch. Features | Transformer, RMSNorm, SwiGLU, RoPE | Llama 1 features + Grouped-Query Attention (GQA) in larger models | Llama 2 features + GQA (all initial models), improved tokenizer |
| Alignment/Safety | Basic pre-training | SFT & RLHF for Chat models, GAtt, safety fine-tuning | Enhanced SFT, rejection sampling, PPO, DPO, improved safety tooling (Llama Guard 2 etc.) |
| Licensing | Non-commercial (research focus) | Custom permissive (commercial allowed, threshold for large companies) | Meta Llama 3 Community License (similar permissive structure to Llama 2) |
| Notable Improvements | Foundational open model for research | Doubled context, GQA, Chat variants, commercial license, safety focus | SOTA open performance, massive data increase, larger tokenizer, 8K context, better reasoning/coding |
| Future Models Planned | N/A | N/A | Larger models (>400B), potential multimodality |

Chapter 5: The Llama Legacy and Future Directions

The Llama series has fundamentally altered the AI landscape. By consistently releasing increasingly powerful models under permissive licenses, Meta has fostered a vibrant ecosystem around open-source AI. This has several profound implications:

- Democratization: Researchers, startups, and developers worldwide can now access, study, and build upon cutting-edge AI technology without prohibitive costs or restrictions.

- Innovation: The availability of strong base models like Llama accelerates innovation, as efforts can focus on fine-tuning for specific domains, improving safety, developing novel applications, and exploring new model architectures.

- Transparency and Scrutiny: Open models allow for greater transparency into model behavior, biases, and capabilities, enabling broader community efforts in safety research and alignment.

- Competition: Llama provides healthy competition to closed-source models, pushing the entire field forward and providing users with more choices.

Looking ahead, the Llama journey is far from over. The impending release of Llama 3’s larger models promises further advancements in capability, potentially including the integration of multiple modalities (like image or audio understanding) alongside text. Continued improvements in efficiency, safety, and multilingual support are also likely focuses.

Meta’s commitment to this open approach, while undoubtedly benefiting the wider community, also serves its own strategic interests by fostering an ecosystem where its tools and platforms (like PyTorch) are central, and by gathering insights from the broad usage and adaptation of its models.

Conclusion

From its inception as a tool for researchers to its current status as a state-of-the-art open-source powerhouse, Meta’s Llama series represents a significant chapter in the story of artificial intelligence. Each iteration – Llama 1, Llama 2, and Llama 3 – has marked a deliberate step towards greater capability, efficiency, safety, and, crucially, openness. By providing powerful LLMs to the world, Meta has not only advanced the field but has also empowered a global community to participate in shaping the future of AI. As Llama continues to evolve, it stands as a compelling example of how open science can drive progress and innovation in one of technology’s most transformative domains.
